Action Step and Orientation

A2. Use assessment data to identify students at risk for literacy difficulties.

This lesson focuses on how educators can use assessment data to identify students who are at risk for literacy difficulties.

Part 1 gives an overview of the process for identifying students at risk for literacy difficulties as part of a response to intervention (RTI) framework.

In Part 2, you will consider what instructional decisions are made when students are identified as at risk and the criteria used to make this determination.

In Part 3, you will explore the issues of reliability and validity in using data to identify students who are, and are not, in need of literacy intervention instruction.

To get started, download the Implementation Guide for this component and refer to the Action Step for this lesson. Examine the Implementation Indicators for each level of implementation and note the Sample Evidence listed at the bottom of the chart.

Part 1—Identifying Students At Risk

RTI is often referred to as a preventative model because the aim is to identify students who are at risk for future reading difficulties and to provide needed support early, before difficulties arise or get worse.

At the heart of this process is assessment. Within an RTI framework, assessment takes many forms. Action Step A2 focuses on assessment for the purpose of identifying students at risk for literacy difficulties. This kind of assessment is commonly called universal screening.

Literacy screenings are similar to medical screenings in many ways. They focus on key indicators of risk. For example, blood pressure screening is done at most doctor visits because blood pressure is one of the factors shown to indicate risk for coronary artery disease (heart disease), a major cause of death in our country. Doctors can identify patients who are at risk by reviewing blood pressure readings. If a patient has a reading outside the range determined to be healthy, the doctor can begin to take appropriate steps to address the patient's possible risk for heart disease. Without the blood pressure reading, the patient's condition would likely go undetected until it was much more difficult to treat.

Similarly, reading screenings focus on skills that are related to a student's literacy development. Screening in secondary school often looks very different than screening in elementary school. For example, in the early grades, letter-naming fluency is often a target skill assessed because it has been shown to indicate future success in learning to read. Therefore, screening and identifying students who struggle with this skill as early as possible alerts school staff to begin intervening with these students before they fall behind their peers in their reading development.

However, in secondary school, the screening process is very different. A common method of screening in secondary school is comparing student performance on state-level assessments with cut scores. State-level assessments are a good source of data for screening at the secondary level because they are comparable across grades (i.e., one can look at performance from 7th through 10th grade and compare changes). They provide a data source that is available to all districts and can offer valuable information when screening a student for whom you have no other accessible data.

By the time students reach middle or high school, most with learning difficulties have already been identified. Many may have received intervention or remedial instruction for the length of their academic careers. Instead of using a single skill-based screening measure (e.g., word reading or letter naming) as one might in the early grades, secondary students are screened in other ways. These may include having the students read grade-level passages and asking them to answer questions. These types of assessments can help confirm which secondary students need continued intervention, and they can also help identify students in need of extra support who have thus far gone unidentified. Although it is rare for a student to reach the secondary level unidentified, checking for such students is an important step in the screening process.

Within RTI, screening is called "universal" because all students are assessed. Just as the doctor will want her patients to have their blood pressure reading taken at least once every year, universal reading screening should take place on a regular basis. In the early grades, schools conduct screening at the beginning of the year (BOY), middle of the year (MOY), and end of the year (EOY) to catch reading problems that can occur in those intervals.

In secondary schools, MOY assessments are less common. Often schools give mid-semester evaluations, end-of-course exams, and 6- or 9-week tests. These assessments are typically informal—produced without the benefit of professional analysis—and their purpose is to measure mastery of the curriculum that has been taught. However, these assessments can provide valuable screening for older students to identify those who are struggling to master basic literacy skills. Secondary schools often have a system in which low grades or assessment scores trigger action such as participation in tutoring or meeting with counselors or mentor teachers. This approach fits well with the goal of screening within an RTI framework: to call attention to a potential need for intervention as early as possible and seek the appropriate way to address that need. Determining how to address that need is the focus of Lesson A3—Determining instructional needs.

If you do use formal screening assessments, they need to be relatively inexpensive and quick to administer because they must be given to all students. In order to produce valid and reliable results, the procedure for administration of screening measures should be standardized, and training for those who conduct the assessments is essential. Just as the nurse or assistant must learn to use equipment properly and listen for the systolic and diastolic pressure, whoever administers the literacy screenings at your school must be well trained in the standardized assessment procedures and be able to demonstrate reliable assessment administration.

A health screening can lead to lifesaving treatment. Similarly, screening students on target literacy skills and intervening with students who struggle with these target skills can lead to not only saving students' academic careers, but also ensuring they have the literacy skills needed for a happy and fruitful future beyond the classroom.

Part 2—Using Data to Make Instructional Decisions for Students Identified as At Risk

The purpose of each assessment should be closely related to the instructional decision that will be made using the data from that assessment. The Action Steps in the Assessment component of the TSLP are organized around these purposes and provide a key reminder to educators to keep the purpose in mind when using assessment data. In the medical analogy, to save both resources and time, we would like our doctors to have in mind how they will use the results of tests they order for us.

You and your team can use your Assessment Audit to guide your discussion about the instructional decisions made from the screening assessments administered at your campus. For each instrument, discuss these questions:

- What are the data from this instrument used for? In other words, what decisions will be made based on this data?

- Are the criteria for this decision defined?

- If yes, are the criteria communicated to teachers and other stakeholders?

- Are the criteria implemented routinely?

You can refer to the third column of the Assessment Audit form for this discussion.

What decisions will be made based on this data?

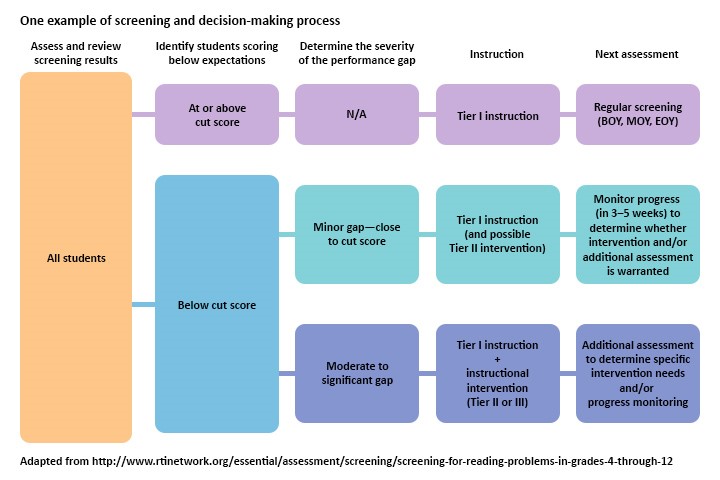

Once screening is completed, the most important decision you and your colleagues need to make is what to do for students who are identified in the screening process as at risk for reading difficulties. This graphic shows a guide to one way of using screening data in an RTI framework:

Students are identified as at risk based on their scores on the screening assessment compared to the expected level of performance for students in their grade level (i.e., norm-referenced). This expected level of performance is called a cut score. Cut scores (which will be discussed further in the next section) are used to identify which students are not at the target level for a particular skill. The severity of the gap between the cut score and a student's score should determine what steps you take next.

As in the example, you may decide to simply monitor students who scored close to the cut score to see if Tier I instruction alone has sufficiently provided the support needed to get back on track for meeting end-of-year goals. For students who fall further below the cut score on the screening assessment, you may decide to conduct additional assessments to determine the students' specific intervention needs. This kind of assessment is the focus of Action Step A3 and is discussed in Lesson A3—Determining instructional needs.
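The monitor-or-assess logic described above can be sketched in a few lines of Python. This is only a minimal illustration: the function name `next_step`, the cut score of 40, and the 5-point "close to the cut score" band are hypothetical values chosen for the example, not figures from any screener or from this lesson. Your instrument's manual and your team's agreed criteria would supply the real numbers.

```python
def next_step(score, cut_score, monitor_band):
    """Suggest a next step from a screening score (illustrative only).

    cut_score and monitor_band are hypothetical inputs; real values
    come from the screener's publisher or your team's agreed criteria.
    """
    if score >= cut_score:
        return "not at risk: continue Tier I instruction"
    gap = cut_score - score
    if gap <= monitor_band:
        # Scored just below the cut score: watch progress in Tier I.
        return "monitor: check whether Tier I instruction closes the gap"
    # Scored well below the cut score: find out what the student needs.
    return "assess further: determine specific intervention needs"


# Made-up scores for three students, using a made-up cut score of 40.
for student_id, score in [("S1", 47), ("S2", 37), ("S3", 22)]:
    print(student_id, next_step(score, cut_score=40, monitor_band=5))
```

Writing the criteria down this explicitly, whether in code, a spreadsheet formula, or a one-page chart, is one way to make sure they are defined, communicated, and applied routinely.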

Throughout this process, teachers making decisions about how to intervene with students identified through the screening as needing additional support may want to look at additional data such as results from previous years (when available), English language proficiency, and educational history. Often this kind of data is limited when students are in early grades, but by secondary school, there should be a considerable amount to view. Looking at multiple sources of information about a student's literacy achievement and needs is the most effective way to ensure valid data and valid decisions.

The example mentioned above is just one way the decision-making process can work for using screening data. Some schools may do things differently, such as providing intervention instruction immediately to all students whose screening results place them in the at-risk level. There are acceptable variations in the implementation of RTI. The guiding principle is to use reliable data in valid ways to help students avoid or reverse the educational equivalent of heart disease: problems in reading that become more and more difficult to overcome as each year goes by.

What criteria will be used for these decisions?

For screeners to be helpful, they have to classify students as either at risk or not at risk. The score that separates "at risk" from "not at risk" is the cut score (mentioned earlier) and is typically established by the publishers of the screening instrument. Some districts decide to set cut scores themselves if they feel the scores based on national norms do not reflect the local student population. The Center on Response to Intervention (2013) cautions, however, that "this process requires a sufficient sample of students and someone with statistical expertise to conduct the analysis" (Brief #2, p. 3).

When implementing universal screening as a part of RTI, many schools find that the screening results identify large percentages of students in the at-risk category. This can occur for several reasons.

Because the goal of screening within an RTI framework is to catch a gap between target skill levels and a student's performance that is just in the making—unnoticed in regular classroom performance—the cut scores tend to be more stringent than lenient. Test publishers, and most school leaders, would rather err on the side of identifying too many students than miss students who need assistance (Center on Response to Intervention, 2013, Brief #2, p. 3). If you do have a significant number of students who fall below the screening cut scores, it will be important to look at individual students and use other data sources, mentioned above, to help determine if they are at risk or if there may have been other issues interfering with either the students' performance or a reliable administration of the assessment.
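The trade-off behind a stringent cut score can be made concrete with a small Python sketch. Everything here is assumed for illustration: the function `screening_outcomes` is hypothetical, and the records are made-up pairs of a screening score and whether the student later struggled. The sketch simply counts, for several candidate cut scores, how many students would be flagged unnecessarily and how many who needed help would be missed.

```python
def screening_outcomes(records, cut_score):
    """Count over-identified and missed students for one cut score.

    records: list of (screening_score, struggled_later) pairs.
    A student is flagged as at risk when the score is below cut_score.
    """
    over_identified = sum(
        1 for score, struggled in records
        if score < cut_score and not struggled
    )
    missed = sum(
        1 for score, struggled in records
        if score >= cut_score and struggled
    )
    return over_identified, missed


# Made-up records: (screening score, did the student later struggle?)
records = [(20, True), (30, True), (38, True), (36, False),
           (42, False), (44, True), (50, False), (55, False)]

for cut in (35, 40, 45):
    over, missed = screening_outcomes(records, cut)
    print(f"cut score {cut}: over-identified={over}, missed={missed}")
```

In this invented data set, raising the cut score from 35 to 45 reduces missed students from 2 to 0 while increasing over-identified students from 0 to 2, which is the direction of error that publishers and school leaders generally prefer.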

On the other hand, a large percentage of students scoring in the at-risk category may actually show a true picture—that there really are many students with gaps in key reading skills. This situation points toward a need for improvement in the general education classroom, for example, Tier I instruction in language arts. You may use this information for the purpose of evaluating your literacy instruction program, which is discussed in more detail in Lesson A5—Evaluating overall literacy performance.

In both cases, a school with a large number of students identified as at risk will need to take a closer look using multiple sources of information to make decisions about how to best use its resources to help each student succeed.

More complex than simple cut scores, decision trees show a series of questions or criteria to determine the best route to serving a student's instructional needs. The decision tree might unite the at-risk cut scores for multiple assessments, as described in this report on using evidence-based decision trees instead of formulas to identify at-risk readers. Or a decision tree may show the use of different kinds of data that inform the RTI process, such as in this locally developed process. One of the main advantages of decision trees is that they can integrate multiple sources of data into the process as they clearly outline the criteria to be used and the possible decisions that can be made based on those criteria. Decision trees can also help in prioritizing and differentiating among students when a large group is identified as at risk.
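As a rough illustration of how a decision tree can combine several data sources, here is a minimal Python sketch. It is not taken from either of the resources mentioned above. The record fields, the cut scores of 50 and 40, and the route labels are all hypothetical; a real tree would use your own instruments and locally agreed criteria.

```python
from dataclasses import dataclass


@dataclass
class StudentRecord:
    # All fields and thresholds in this example are hypothetical.
    state_score: int            # score on the state-level assessment
    passage_score: int          # score on a grade-level passage screener
    below_cut_last_year: bool   # prior-year result, when available


def route_student(rec, state_cut=50, passage_cut=40):
    """Walk a small decision tree and return a suggested route."""
    below_state = rec.state_score < state_cut
    below_passage = rec.passage_score < passage_cut

    if not below_state and not below_passage:
        return "Tier I instruction"
    if below_state and below_passage:
        # Both screeners agree: highest priority for follow-up.
        return "diagnostic assessment and intervention planning"
    # Screeners disagree: use the student's history to decide.
    if rec.below_cut_last_year:
        return "diagnostic assessment and intervention planning"
    return "Tier I instruction with progress monitoring"


print(route_student(StudentRecord(state_score=45, passage_score=44,
                                  below_cut_last_year=False)))
```

Because each branch names the data it uses and the decision it leads to, a tree like this also gives a natural way to prioritize among students when many fall below a single cut score.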

Part 3—Ensuring Validity and Reliability of Screening Data

The decisions that you make depend upon valid and reliable data. For each instrument you use to acquire screening data, you and your team will want to discuss the following questions:

- Is this data valid for identifying students at risk?

- Is the data reliable?

- Is the instrument appropriate for students at this grade level and age?

- Is the instrument appropriate for all students' language proficiency and language of instruction?

You can refer to the fourth column of the Assessment Audit form for this discussion.

An important source of information for this discussion is the instruction manual for each assessment. Publishers typically provide descriptions of the intended use and application of the assessment instruments. To obtain data that is valid, the construct of the instrument should match your purpose, which in this case is to identify students at risk. You can also use the screening tools chart from the Center on Response to Intervention, accessible from the link in the To Learn More section below, to learn about the reliability, validity, classification accuracy, and other features of many commonly used screeners.

Test administration, scoring, and data management are the main concerns with regard to reliable data (Center on Response to Intervention, 2013, Brief #4). An inconsistency in the administration of screeners can seriously compromise your ability to identify students in need of intervention. As mentioned previously, regular training for everyone who administers screeners should be mandatory. Again, refer to the assessment manual for guidance. For assessment instruments that require some subjective judgment, you may consider more intensive trainings that include mock testing and feedback. Such training may be available through the test publisher.

Training is also needed to avoid inconsistencies in scoring. To avoid unintended bias, your process could include replacing student names with ID numbers or, when possible, assigning evaluators who are unfamiliar with the students whose assessments they are scoring. Providing written scoring procedures, guidelines, and rubrics can also help address this issue. When using the state assessment as a screener, many of these issues of administration and scoring are taken care of. However, you and your staff will need to address these concerns about reliability and validity in the other sources of data to confirm and clarify students’ instructional needs.

After taking steps to ensure reliability and validity of your data, it is worth thinking about how you can find and reduce errors that might come from data entry. For example, consider these questions:

- Where might human error take place?

- If scores are manually entered into a system at some point, how can you test samples to check for accuracy?

- What procedures could help ensure accurate data entry?

- What might necessitate verifying the scores for an individual or group of students, and how might you do that?
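One way to test samples of manually entered scores is to draw a random subset of students and compare what was typed into the system against the original score sheets. The Python sketch below assumes both sets of scores are available as dictionaries keyed by student ID. The function names, IDs, and scores are all made up for illustration.

```python
import random


def sample_for_verification(entered_scores, sample_size, seed=None):
    """Pick a random subset of student IDs whose entries should be rechecked."""
    rng = random.Random(seed)
    ids = sorted(entered_scores)
    return rng.sample(ids, min(sample_size, len(ids)))


def find_mismatches(entered_scores, source_scores, ids_to_check):
    """Return {student_id: (entered, source)} where the two disagree."""
    return {
        sid: (entered_scores[sid], source_scores.get(sid))
        for sid in ids_to_check
        if entered_scores[sid] != source_scores.get(sid)
    }


# Made-up example: scores typed into a system vs. the paper score sheets.
entered = {"1001": 42, "1002": 35, "1003": 53, "1004": 28}
paper = {"1001": 42, "1002": 53, "1003": 53, "1004": 28}

to_check = sample_for_verification(entered, sample_size=4, seed=0)
print(find_mismatches(entered, paper, to_check))
```

Here the sample covers all four invented students, so the check surfaces the one transposed entry (35 entered for a score of 53). With a real roster you would choose a sample size your team can realistically verify and widen the check if the sample turns up errors.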

Finally, your team will need to consider the appropriateness of each assessment for the age and languages of your students. Typically, screeners identify the age or grade level at which they are designed to be used. If you work with high school students and a screener is validated only up through part of the high school range (grades 7–9, for example), that screener is valid for your 9th-grade students but not for students above that level. This information is often challenging to find for professionals working with secondary school students, so make sure you double-check that what you're using actually measures outcomes for your specific students. If your staff is following these guidelines and screening all students in each grade with the appropriate instrument, you can check off this item in your audit.

Experts encourage using the same screening processes for English learners that are used for their English-proficient peers, but you may need to look at the data differently (Echevarria & Hasbrouck, 2009; Esparza Brown & Sanford, 2011). Research suggests that using screening data together with other sources of information about the English learners' ability to learn and rate of learning may be a more accurate way to identify English learners who are and are not in need of literacy intervention beyond linguistically supportive and differentiated Tier 1 instruction (Project ELITE, Project ESTRE2LLA, & Project REME, in press).

TO LEARN MORE: There are many sources of information about screening students for reading difficulties in secondary schools. Here are some of the best sites for research and resources:

Within the extensive website of the Center on Response to Intervention is a section dedicated to universal screening. Online modules and articles, several cited in this lesson, can be accessed from the Featured Resources bar. One of the many resources there is the center's Screening Tools Chart.

The RTI Action Network has a broad range of resources and articles related to RTI implementation. These in particular can support additional learning about screening for reading difficulties:

- Universal Screening for Reading Problems: Why and How Should We Do This?

- Data-based Decision Making (webinar)

- Screening for Reading Problems in an RTI Framework

- How the EBIS/RTI Process Works in Secondary Schools

NEXT STEPS: Depending on your progress in using assessment data to identify students at risk for literacy difficulties at your campus, you may want to consider the following next steps:

- Discuss how screening data is used to make instructional decisions and how you ensure the validity of the data. You may use the third and fourth columns of the Assessment Audit as described in Part 2 and Part 3 of this lesson.

- Gather and review the administration manuals and procedures for each screening assessment used at your campus.

- Determine which staff members have been trained in administering and scoring the various assessments used at your campus.

- Determine procedures for gathering and sharing additional data to support valid decisions for English learners.

Assignment

A2. Use assessment data to identify students at risk for literacy difficulties.

With your site/campus-based leadership team, review your team’s self-assessed rating for Action Step A2 in the TSLP Implementation Status Ratings document and then respond to the four questions in the assignment.

TSLP Implementation Status Ratings 6-12

As you complete your assignment with your team, the following resources and information from this lesson’s content may be useful to you:

- Refer to Part 1 for an overview of the process of identifying students at risk for literacy difficulties.

- Refer to Part 2 for information about the instructional decisions and criteria used in identifying and addressing the needs of students at risk.

- Refer to Part 3 for information about reliability and validity in identifying students who are, and are not, in need of literacy intervention instruction.

Next Steps also contains suggestions that your campus may want to consider when you focus your efforts on this Action Step.

To record your responses, go to the Assignment template for this lesson and follow the instructions.

References

Center on Response to Intervention. (2013). Brief #2: Cut scores. Screening Briefs Series. Washington, DC: U.S. Department of Education, Office of Special Education Programs, Center on Response to Intervention. Retrieved from http://www.rti4success.org/resource/screening-briefs

Center on Response to Intervention. (2013). Brief #4: Ensuring fidelity of assessment and data entry. Screening Briefs Series. Washington, DC: U.S. Department of Education, Office of Special Education Programs, Center on Response to Intervention. Retrieved from http://www.rti4success.org/resource/screening-briefs

Echevarria, J., & Hasbrouck, J. (2009). Response to intervention and English learners. CREATE Brief. Retrieved from http://www.cal.org/create/publications/briefs/response-to-intervention-and-english-learners.html

Esparza Brown, J., & Sanford, A. (2011). RTI for English language learners: Appropriately using screening and progress monitoring tools to improve instructional outcomes. Washington, DC: U.S. Department of Education, Office of Special Education Programs, National Center on Response to Intervention. Retrieved from http://www.rti4success.org/sites/default/files/rtiforells.pdf

Fuchs, L., Kovaleski, J. F., & Carruth, J. (2009, April 30). Data-based decision making. RTI Action Network [Forum]. Retrieved from http://www.rtinetwork.org/professional/forums-and-webinars/forums/data-based-decision-making

Jenkins, J. R., & Johnson, E. (n.d.). Universal screening for reading problems: Why and how should we do this? RTI Action Network. Retrieved from http://www.rtinetwork.org/essential/assessment/screening/readingproblems

Johnson, E. S., Pool, J., & Carter, D. R. (n.d.). Screening for reading problems in an RTI framework. RTI Action Network. Retrieved from http://www.rtinetwork.org/essential/assessment/screening/screening-for-reading-problems-in-an-rti-framework

Johnson, E. S., Pool, J., & Carter, D. R. (n.d.). Screening for reading problems in grades 1 through 3: An overview of select measures. RTI Action Network. Retrieved from http://www.rtinetwork.org/essential/assessment/screening/screening-for-reading-problems-in-grades-1-through-3

Johnson, E. S., Pool, J., & Carter, D. R. (n.d.). Screening for reading problems in grades 4 through 12. RTI Action Network. Retrieved from http://www.rtinetwork.org/essential/assessment/screening/screening-for-reading-problems-in-grades-4-through-12

Koon, S., Petscher, Y., & Foorman, B. R. (2014). Using evidence-based decision trees instead of formulas to identify at-risk readers (REL 2014-036). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory Southeast. Retrieved from http://ies.ed.gov/ncee/edlabs/regions/southeast/pdf/REL_2014036.pdf

Project ELITE, Project ESTRE2LLA, & Project REME. (in press). Assessment and data-based decision making within a multitiered instructional framework. Washington, DC: U.S. Office of Special Education Programs.

Reed, D., Wexler, J., & Vaughn, S. (2012). RTI for reading at the secondary level. New York, NY: The Guilford Press.